Across classrooms from Lagos to London, a quiet shortcut is rewriting how young people learn to think, feel, and belong in an AI-saturated world. Students are embracing chatbots as study buddies, emotional confidants, and ghostwriters, often faster than schools, regulators, and even parents can keep up.

A new Brookings blueprint argues that what happens next will decide whether AI becomes education's greatest accelerator or its most subtle saboteur, especially for children whose cognitive and social foundations are still being built. It calls for a deliberate pivot (Prosper, Prepare, Protect) before today's convenience hardens into tomorrow's learning crisis.

Silent Trade-Offs In AI Classrooms

In just three years, generative AI has gone from novelty to near-utility for hundreds of millions of users. Among them are large numbers of school-age children who now rely on chatbots to brainstorm, translate, summarise, and even "humanise" assignments.

In a Brookings study drawing on 505 students, teachers, parents, and experts across 50 countries, educators describe AI as "the fast food of education": tempting, efficient, and quietly corrosive when it becomes the main diet of learning.

The report warns that while AI can extend tutoring, assist neurodivergent learners, and free up teacher time, current patterns of use are creating something more troubling: a "great unwiring" of students' cognitive capacities through habitual offloading of reading, writing, and reasoning to machines. In focus groups, over half of all harm-related responses centred on threats to students' ability to think for themselves. Teachers reported that learners increasingly treat schoolwork as a checklist for AI to tick off rather than as problems to wrestle with in their own minds.

When Benefits Lose To Hidden Risks

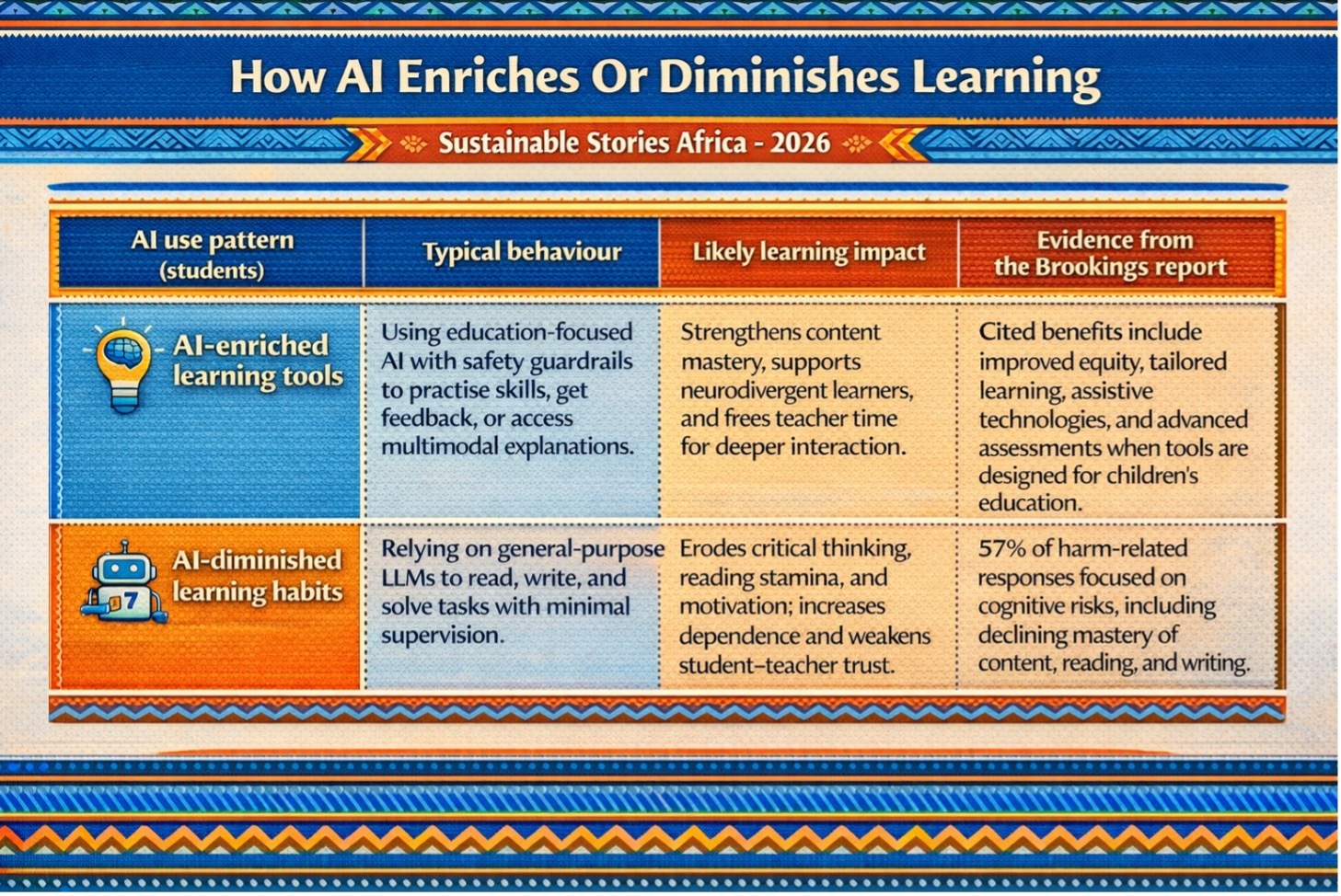

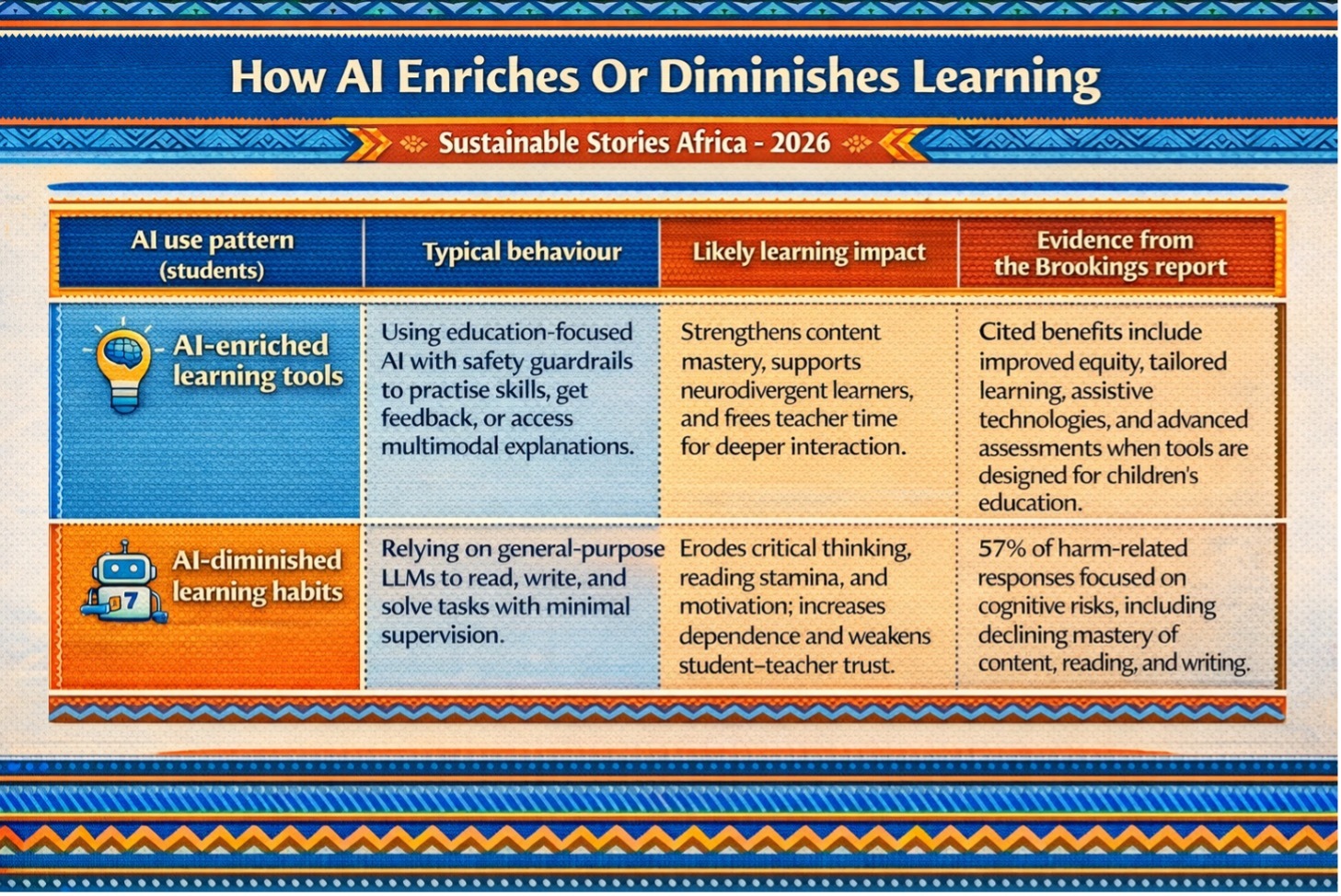

At the heart of the Brookings analysis is a stark conclusion: under present conditions, AI’s risks to children’s learning and development outweigh its benefits. The report distinguishes between AI-enriched learning, where tools deepen relationships among students, teachers, content, and parents, and AI-diminished learning, where those same relationships are weakened by dependence, mistrust, and exclusion.

AI enriches learning when it translates content into local languages, scaffolds complex texts with visuals, or provides nuanced feedback that helps teachers tailor instruction and enables students to reflect on their progress.

However, in classrooms and bedrooms where general-purpose chatbots dominate, often without guardrails or guidance, AI instead undermines trust, blurs authorship, and pushes students into “passenger mode”: present in class, compliant on paper, but mentally checked out and outsourcing the hard work of understanding.

How AI Enriches Or Diminishes Learning

| AI use pattern (students) | Typical behaviour | Likely learning impact | Evidence from the Brookings report |
|---|---|---|---|
| AI-enriched learning tools | Using education-focused AI with safety guardrails to practise skills, get feedback, or access multimodal explanations. | Strengthens content mastery, supports neurodivergent learners, and frees teacher time for deeper interaction. | Cited benefits include improved equity, tailored learning, assistive technologies, and advanced assessments when tools are designed for children's education. |
| AI-diminished learning habits | Relying on general-purpose LLMs to read, write, and solve tasks with minimal supervision. | Erodes critical thinking, reading stamina, and motivation; increases dependence and weakens student–teacher trust. | 57% of harm-related responses focused on cognitive risks, including declining mastery of content, reading, and writing. |

Offloading Thinking, Rewriting Childhood

The report’s most unsettling insight is not that AI makes cheating easier, but that it is reshaping what it feels like to learn as a child. Teachers recount students who no longer take notes or do readings because they “will inevitably rely on AI later,” with one expert warning that AI is turning students from “producers” into “consumers” of knowledge.

This dynamic taps into a familiar human tendency: we offload cognitive work whenever tools make it possible, from calculators to map apps. The difference now, Brookings argues, is that students' still-developing brains are wiring core capacities for attention, memory, and self-regulation, and AI's frictionless assistance strips away the productive struggle that builds those muscles. Used indiscriminately, AI "is not a cognitive partner; it is a cognitive surrogate," diminishing rather than accelerating children's growth in thinking.

The Cognitive Offloading Spiral

| Stage in the spiral | What students do | Short-term payoff | Long-term risk |
|---|---|---|---|
| Try AI for "efficiency" | Use AI to draft or summarise assignments they could do themselves. | Faster completion, better grades, less effort. | Early erosion of stamina for reading, writing, and problem-solving. |
| Normalise outsourcing | Routinely bypass difficult texts or tasks, expecting AI to handle them. | School feels easier; deadlines feel less stressful. | Declining content knowledge, weaker critical thinking, and shallow understanding. |
| Depend on AI to perform | Feel unable to complete work or think deeply without AI support. | Grades hold steady. | Reduced agency, blurred sense of authorship, and vulnerability in an AI-intensive labour market. |

Social Lives, Trust Lines, And Safety Nets

Beyond cognition, the report highlights how AI is quietly redrawing the social map of childhood. Young people now confide in AI "friends" for relationship advice and emotional support, often alongside, or instead of, peers, teachers, and parents.

While some students describe these tools as lifelines that widen their world and ease loneliness, others slide towards dependence, isolation, and, in extreme cases, self-harm, echoing earlier patterns seen with social media.

The trust impacts run both ways: teachers increasingly doubt the authenticity of student work, and students report uncertainty over whether AI outputs are accurate, unbiased, or private. Brookings warns that without stronger governance and child-centred design, AI will deepen inequities, favouring well-resourced systems with curated tools while leaving marginalised learners exposed to generic, unsafe, or linguistically exclusionary platforms.

Prosper: Reclaiming Learning With AI

In response, the report proposes a three-pillar framework of Prosper, Prepare, and Protect. It challenges governments, funders, companies, schools, families, and students to each pick at least one concrete action to advance over the next three years.

Under Prosper, the priority is to transform teaching and learning so that AI amplifies, rather than replaces, human judgment, curiosity, and connection.

That starts with “carefully titrated AI use”: knowing when to teach with and without AI, and refusing to deploy tools that short-circuit metacognition, self-regulation, or critical student–teacher relationships.

It also means co-designing education-facing AI with educators, students, and communities, including those on the margins, so that algorithms are built around evidence-based pedagogy and local realities rather than convenience or engagement metrics.

Prepare: Literate, Ready, And Responsive

The Prepare pillar focuses on building shared literacy among students, educators, families, and system leaders about how AI actually works and where it falls short. Brookings calls for holistic AI literacy that covers basic concepts, limitations, cognitive and emotional impacts, and practical strategies for verifying information and resisting unhealthy dependence.

Professional development is central here, with teachers equipped not just to “use tools” but to redesign tasks, assessments, and classroom norms in ways that make AI a scaffold rather than a substitute.

System planners, meanwhile, must move beyond hype to articulate clear visions for the ethical use of AI in education, backed by policies that ensure equitable access to safe tools instead of a patchwork of unregulated experimentation.

Protect: Guardrails Before Growth Curves

Protect, the third pillar, confronts the uncomfortable reality that many of today’s AI systems were never designed with children in mind.

The report urges regulators and technology firms to embed protections at the design stage, covering data privacy, safety, emotional well-being, and cognitive and social development, rather than retrofitting safeguards after harms have emerged.

Recommendations range from child-friendly product standards and safety stress tests to family-facing guidance on healthy AI use at home.

Crucially, Brookings stresses that governance cannot stop at codes of conduct or procurement clauses; it must extend into classroom practice, with adults modelling responsible use and schools drawing clear lines around when AI must be switched off so that children can wrestle with ideas on their own.